In addition to the $9.4 million fine, the U.K. regulator ordered Clearview to delete all data it collected from U.K. residents. That would ensure its system could no longer identify a picture of a U.K. user. But it is not clear whether Clearview will pay the fine or comply with that order.

“As long as there are no international agreements, there is no way of enforcing things like what the ICO is trying to do,” Birhane says.

“This is a clear case where you need a transnational agreement.”

It wasn’t the first time Clearview has been reprimanded by regulators. In February, Italy’s data protection agency fined the company 20 million euros ($21 million) and ordered it to delete data on Italian residents. Similar orders have been filed by other E.U. data protection agencies, including in France. The French and Italian agencies did not respond to questions about whether the company has complied.

U.K. privacy regulator John Edwards said Clearview had informed his office that it cannot comply with his order to delete U.K. residents’ data. In an emailed statement, Clearview’s CEO Hoan Ton-That indicated that this was because the company has no way of knowing where people in the photos live. “It is impossible to determine the residency of a citizen from just a public photo from the open internet,” he said. “For example, a group photo posted publicly on social media or in a newspaper might not even include the names of the people in the photo, let alone any information that can determine with any level of certainty if that person is a resident of a particular country.” In response to TIME’s questions about whether the same applied to the rulings by the French and Italian agencies, Clearview’s spokesperson pointed back to Ton-That’s statement.

Ton-That added: “My company and I have acted in the best interests of the U.K. and their people by assisting law enforcement in solving heinous crimes against children, seniors, and other victims of unscrupulous acts … We collect only public data from the open internet and comply with all standards of privacy and law. I am disheartened by the misinterpretation of Clearview AI’s technology to society.”

Clearview did not respond to questions about whether it intends to pay, or contest, the $9.4 million fine from the U.K.

# Clearview facial recognition software drivers

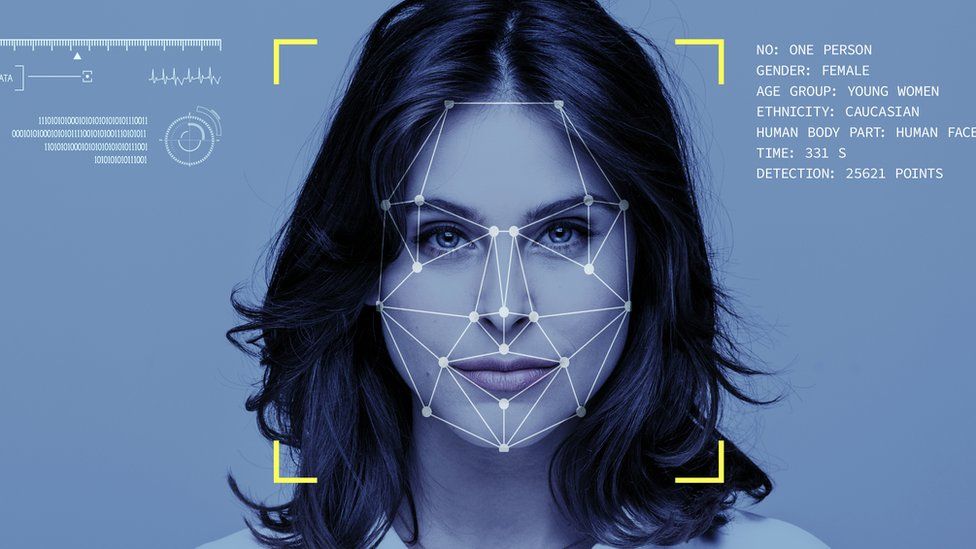

The vast majority of people have no idea their photographs are likely included in the dataset that Clearview’s tool relies on. “They don’t ask for consent,” says Abeba Birhane, a senior fellow for trustworthy AI at Mozilla. “And when it comes to the people whose images are in their data sets, they are not aware that their images are being used to train machine learning models.”

The company says its tools are designed to keep people safe. “Clearview AI’s investigative platform allows law enforcement to rapidly generate leads to help identify suspects, witnesses and victims to close cases faster and keep communities safe,” the company says on its website.

But Clearview has faced other intense criticism, too. Advocates for responsible uses of AI say that facial recognition technology often disproportionately misidentifies people of color, making it more likely that law enforcement agencies using the database could arrest the wrong person. And privacy advocates say that even if those biases are eliminated, the data could be stolen by hackers or enable new forms of intrusive surveillance by law enforcement or governments.
